Lecture 23: Linear regression¶

This data-set of English Premier League (of soccer) players' information. We are interested in the relationship between height and weight.

import numpy as np

import csv

with open('epl.csv', 'r') as f:

reader = csv.reader(f)

data_list = list(reader)

print(data_list[0])

print(data_list[1])

print(data_list[2])

# number of data points

N = len(data_list) - 1 # remove 1 because first line was just labels

N

heights = np.zeros(N)

weights = np.zeros(N)

for i in range(1,N+1):

heights[i - 1] = data_list[i][5] # height was at index 5

weights[i - 1] = data_list[i][6] # weight was at index 6

print(heights[:10])

print(weights[:10])

import matplotlib.pyplot as plt

plt.scatter(heights, weights, alpha=0.2) # alpha makes the dots a little transparent

plt.show()

We would like to fit a line to this data. i.e.

Question Based on this information, what is the most likely linear relationship between the height and weight of a soccer player?

Since we are doing a linear model: if $x$ is height, then the weight $y$ should be

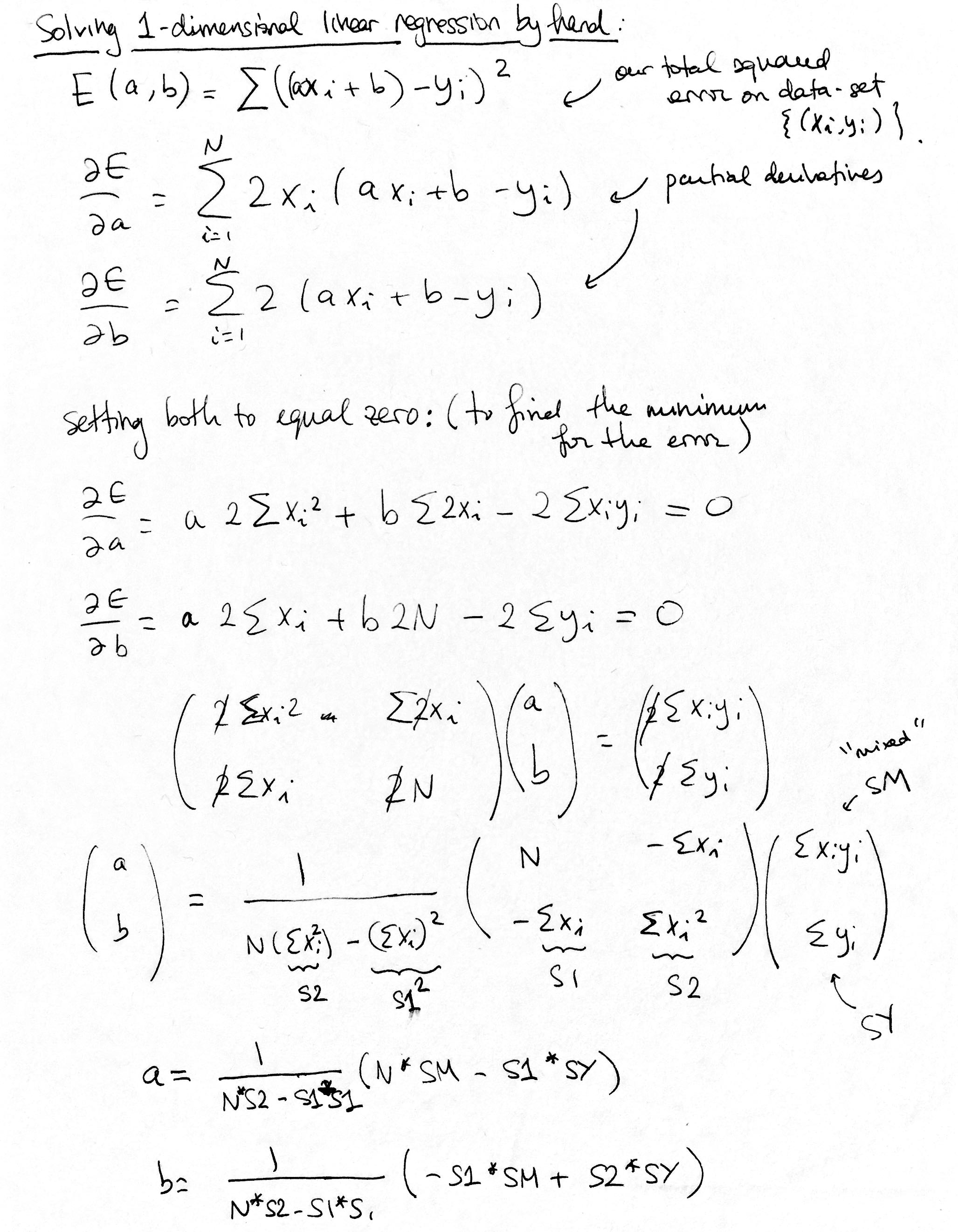

$$ y = ax + b.$$So we are looking for $a$ and $b$ that will fit the line as well as possible to the data. What does that mean though? It means that we want to minimize the error that our linear model $y = ax + b$ will have on predicting the weight from the height on our existing data. On a height-weight pair $(x_i, y_i)$ from our data-set, the model is guessing $y = ax_i + b$, and the actual answer is $y_i$. The total (squared) error is:

$$E(a,b) = \sum_{i=1}^{N} ((ax_i + b) - y_i)^2$$where $\{(x_i, y_i)\}$ are our height-weight pairs. ("Why squared error and not sum of absolute values?" you might say, both are good options, there are good reasons to go for squared error as the first choice, long story but it has something to do with the Gaussian distribution being similar to $e^{x^2}$)).

We want to minimize this squared error function above. Our options:

- Solve $\nabla E(a,b) = 0$. The gradient will be zero at a local minimum.

- Use gradient descent.

First method:¶

Solving the gradient = 0. You write down the partial derivatives and solve the linear equations for $a$ and $b$.

Let's code it up.

X = heights

Y = weights

S1 = np.sum(X)

S2 = np.sum(X * X) # sum of the squares

SM = np.sum(X * Y) # sum of x_i * y_i

SY = np.sum(Y)

a = (1 / (N*S2 - S1*S1)) * (N * SM - S1 * SY)

b = (1 / (N*S2 - S1*S1)) * (- S1 * SM + S2 * SY)

plt.scatter(heights, weights, alpha=0.3)

XX = np.linspace(60,85,200).reshape((-1,1))

YY = a * XX + b

plt.scatter(heights, weights, alpha=0.2)

plt.plot(XX,YY,"r")

plt.show()

This is called a closed-form solution because we are getting straight to the answer. (no gradient descent or approximation)

There is a similar soution for when you are trying to learn a linear approximation from a data-set:

$$\{ (x^{(i)}_1,...,x^{(i)}_n,y^{(i)}) \}$$where we would be trying to learn a function:

$$f(x_1,....,x_n)$$(e.g. learning the weight from height and age)

It's called the least squares method.

First method using the built-in library:¶

No need to be a hero every time. We can use sklearn's LinearRegression() model.

You train the parameters by using the fit function and you use the function it learns using the predict function.

from sklearn import linear_model

regr = linear_model.LinearRegression()

regr.fit(heights.reshape((N,1)), weights.reshape((N,1)))

XX = np.linspace(60,85,200).reshape((-1,1))

YY = regr.predict(XX)

plt.scatter(heights, weights, alpha=0.2)

plt.plot(XX,YY,"r")

plt.show()